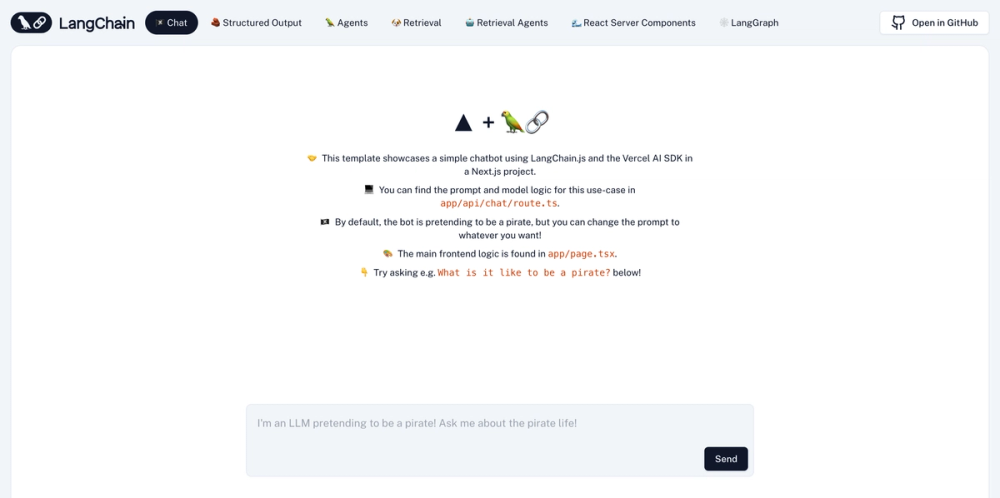

🦜️🔗 LangChain + Next.js Starter Template

This template scaffolds a LangChain.js + Next.js starter app. It showcases how to use and combine LangChain modules for several use cases:

- Simple chat

- Returning structured output from an LLM call

- Answering complex, multi-step questions with agents

- Retrieval augmented generation (RAG) with a chain and a vector store

- Retrieval augmented generation (RAG) with an agent and a vector store

Most of them use Vercel's AI SDK to stream tokens to the client.

The agents use LangGraph.js, LangChain's framework for building agentic workflows. They use preconfigured helper functions to minimize boilerplate, but you can replace them with custom graphs as desired.

It's free-tier friendly too! The bundle size for LangChain itself is quite small, using less than 4% of the total Vercel free tier edge function allotment of 1 MB.

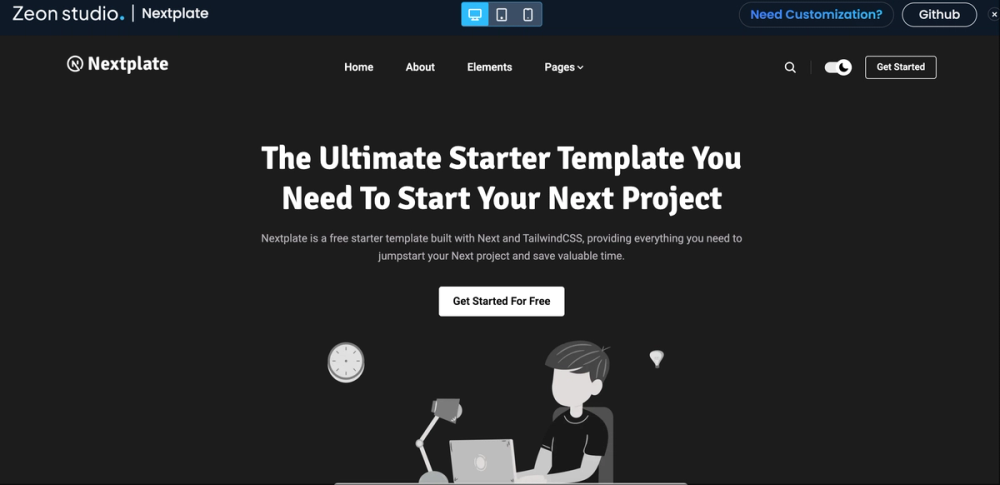

🚀 Getting Started

First, clone this repo to your local machine.

Next, you'll need to set up environment variables in your repo's .env.local file. Copy the .env.example file to .env.local.

To start with the basic examples, you'll just need to add your OpenAI API key.

Because this app is made to run in serverless Edge functions, set the LANGCHAIN_CALLBACKS_BACKGROUND environment variable to false if you are using LangSmith tracing. This ensures tracing finishes before the function exits.
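As a rough illustration of the environment setup described above, here is a minimal sketch of a check you could run before starting the app. `checkEnv` is a hypothetical helper, not part of this template. The names OPENAI_API_KEY and LANGCHAIN_TRACING_V2 are assumptions (confirm them against .env.example); only LANGCHAIN_CALLBACKS_BACKGROUND comes from the text above.

```typescript
// Hypothetical helper: reports problems with the variables this README mentions.
type Env = Record<string, string | undefined>;

interface EnvReport {
  missing: string[];
  warnings: string[];
}

function checkEnv(env: Env): EnvReport {
  const missing: string[] = [];
  const warnings: string[] = [];

  // Assumed variable name for the OpenAI key; check .env.example for the real one.
  if (!env["OPENAI_API_KEY"]) {
    missing.push("OPENAI_API_KEY");
  }

  // Assumed flag that switches LangSmith tracing on.
  const tracingOn = env["LANGCHAIN_TRACING_V2"] === "true";

  // With tracing on, callbacks should not run in the background on Edge functions.
  if (tracingOn && env["LANGCHAIN_CALLBACKS_BACKGROUND"] !== "false") {
    warnings.push(
      "Set LANGCHAIN_CALLBACKS_BACKGROUND=false so traces finish before the function exits."
    );
  }

  return { missing, warnings };
}
```

In a Node.js script you would likely call this as `checkEnv(process.env)` and print the report; the function takes a plain object so it can be tried without any runtime-specific globals.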

Next, install the required packages using your preferred package manager (e.g. yarn).

Now you're ready to run the development server:

yarn dev

Open http://localhost:3000 in your browser to see the result! Ask the bot something and you'll see a streamed response.

You can start editing the page by modifying app/page.tsx. The page auto-updates as you edit the file.

Backend logic lives in app/api/chat/route.ts. From here, you can change the prompt and model, or add other modules and logic.

🧱 Structured Output

The second example shows how to have a model return output according to a specific schema using OpenAI Functions.

Click the Structured Output link in the navbar to try it out.

The chain in this example uses a popular library called Zod to construct a schema, then formats it in the way OpenAI expects.

It then passes that schema as a function into OpenAI and passes a function_call parameter to force OpenAI to return arguments in the specified format.
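To make the mechanism above concrete, here is a dependency-free sketch of the request shape involved. It is not the template's code: the template derives the JSON Schema from a Zod schema, while this sketch writes it by hand. The function name `output_formatter`, the fields `tone` and `word_count`, and the model name are illustrative assumptions. The `functions` and `function_call` fields are the parameters of OpenAI's function-calling interface for chat completions.

```typescript
// JSON Schema describing the output we want back.
// Field names are illustrative assumptions, not the template's schema.
const outputSchema = {
  type: "object",
  properties: {
    tone: {
      type: "string",
      enum: ["positive", "negative", "neutral"],
      description: "The overall tone of the input",
    },
    word_count: {
      type: "number",
      description: "The number of words in the input",
    },
  },
  required: ["tone", "word_count"],
} as const;

// Hypothetical function name exposed to the model.
const FUNCTION_NAME = "output_formatter";

// Builds the request body; sending it to the API is left out of this sketch.
function buildStructuredOutputRequest(userInput: string) {
  return {
    model: "gpt-4o-mini", // assumed model name
    messages: [{ role: "user", content: userInput }],
    // The schema is passed as the parameters of a function...
    functions: [
      {
        name: FUNCTION_NAME,
        description: "Formats the output according to the schema",
        parameters: outputSchema,
      },
    ],
    // ...and function_call forces the model to "call" that function.
    function_call: { name: FUNCTION_NAME },
  };
}

interface FormattedOutput {
  tone: string;
  word_count: number;
}

// The model returns the arguments as a JSON string, so parse and check them.
function parseFunctionArguments(rawArguments: string): FormattedOutput {
  const parsed: unknown = JSON.parse(rawArguments);
  if (typeof parsed !== "object" || parsed === null) {
    throw new Error("Expected a JSON object");
  }
  const record = parsed as Record<string, unknown>;
  const tone = record["tone"];
  const wordCount = record["word_count"];
  if (typeof tone !== "string" || typeof wordCount !== "number") {
    throw new Error("Arguments do not match the schema");
  }
  return { tone, word_count: wordCount };
}
```

Forcing the function call means the reply contains arguments rather than free text, which is why the second helper parses a JSON string instead of reading message content.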

🦜 Agents

To try out the agent example, you'll need to give the agent access to the internet by populating the SERPAPI_API_KEY in .env.local.

Head over to the SerpApi website and get an API key if you don't already have one.

You can then click the Agent example and try asking it more complex questions.

This example uses a prebuilt LangGraph agent, but you can customize your own as well.

🐶 Retrieval

The retrieval examples both use Supabase as a vector store. However, you can swap in another supported vector store if preferred by changing the code under app/api/retrieval/ingest/route.ts, app/api/chat/retrieval/route.ts, and app/api/chat/retrieval_agents/route.ts.

For Supabase, follow these instructions to set up your database, then get your database URL and private key and paste them into .env.local.

You can then switch to the Retrieval and Retrieval Agent examples. The default document text is pulled from the LangChain.js retrieval use case docs, but you can change it to whatever text you'd like.

For a given text, you'll only need to press Upload once. Pressing it again will re-ingest the docs, resulting in duplicates.

You can clear your Supabase vector store by navigating to the console and running DELETE FROM documents;.

After splitting, embedding, and uploading some text, you're ready to ask questions!
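For intuition about the splitting step, here is a minimal sketch of a fixed-size character splitter with overlap. It is a deliberately naive stand-in, not the splitter the template uses, and the `splitText` name and default sizes are assumptions chosen for illustration. Each resulting chunk would then be embedded and stored as one row in the vector store.

```typescript
// Naive splitter: fixed-size character windows that overlap slightly,
// so text cut at a chunk boundary still appears whole in one chunk.
function splitText(text: string, chunkSize = 256, chunkOverlap = 20): string[] {
  if (chunkSize <= 0) {
    throw new Error("chunkSize must be positive");
  }
  if (chunkOverlap < 0 || chunkOverlap >= chunkSize) {
    throw new Error("chunkOverlap must be in the range [0, chunkSize)");
  }

  const chunks: string[] = [];
  const step = chunkSize - chunkOverlap; // how far the window advances each time

  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    // Stop once the current window has reached the end of the text.
    if (start + chunkSize >= text.length) {
      break;
    }
  }
  return chunks;
}
```

Because splitting is deterministic, uploading the same text twice produces the same chunks twice, which is why pressing Upload again leads to duplicate rows.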

The specific variant of the conversational retrieval chain used here is composed using LangChain Expression Language, which you can read more about here. This chain example also returns cited sources in a response header, in addition to the streaming response.
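Since the response body is taken up by streamed tokens, sources have to travel another way, and a header is one option. The sketch below shows one possible encoding, assuming a header named `x-sources` and URI-encoded JSON; the header name, the helper names, and the encoding are all assumptions, so check app/api/chat/retrieval/route.ts for what the template actually does.

```typescript
interface SourceDoc {
  pageContent: string;
  metadata: Record<string, unknown>;
}

// Assumed header name, for illustration only.
const SOURCES_HEADER = "x-sources";

// Server side: shorten each document and serialize the list to an
// ASCII-only string, since header values cannot carry arbitrary Unicode.
function encodeSources(docs: SourceDoc[], previewLength = 50): string {
  const trimmed = docs.map((doc) => {
    // Slice by code point so a surrogate pair is never cut in half.
    const chars = Array.from(doc.pageContent);
    const preview =
      chars.length > previewLength
        ? chars.slice(0, previewLength).join("") + "..."
        : doc.pageContent;
    return { pageContent: preview, metadata: doc.metadata };
  });
  return encodeURIComponent(JSON.stringify(trimmed));
}

// Client side: reverse the encoding after reading the header value.
function decodeSources(headerValue: string): SourceDoc[] {
  return JSON.parse(decodeURIComponent(headerValue)) as SourceDoc[];
}

// Headers to attach to the streaming response (plain object for simplicity).
function buildSourceHeaders(docs: SourceDoc[]): Record<string, string> {
  return { [SOURCES_HEADER]: encodeSources(docs) };
}
```

On the client, the value would be read from the response headers before consuming the stream, then passed to `decodeSources`. Truncating each document keeps the header small, since servers and proxies commonly limit total header size.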